
Fix Robots.txt Errors - Free Generator and Validator Tool

Create a perfect robots.txt file to control how search engine crawlers access your website and to keep them out of sensitive areas. Our free tool at A10ROG helps you generate, validate, and test robots.txt rules instantly, so you can avoid common SEO mistakes with an easy-to-use generator.

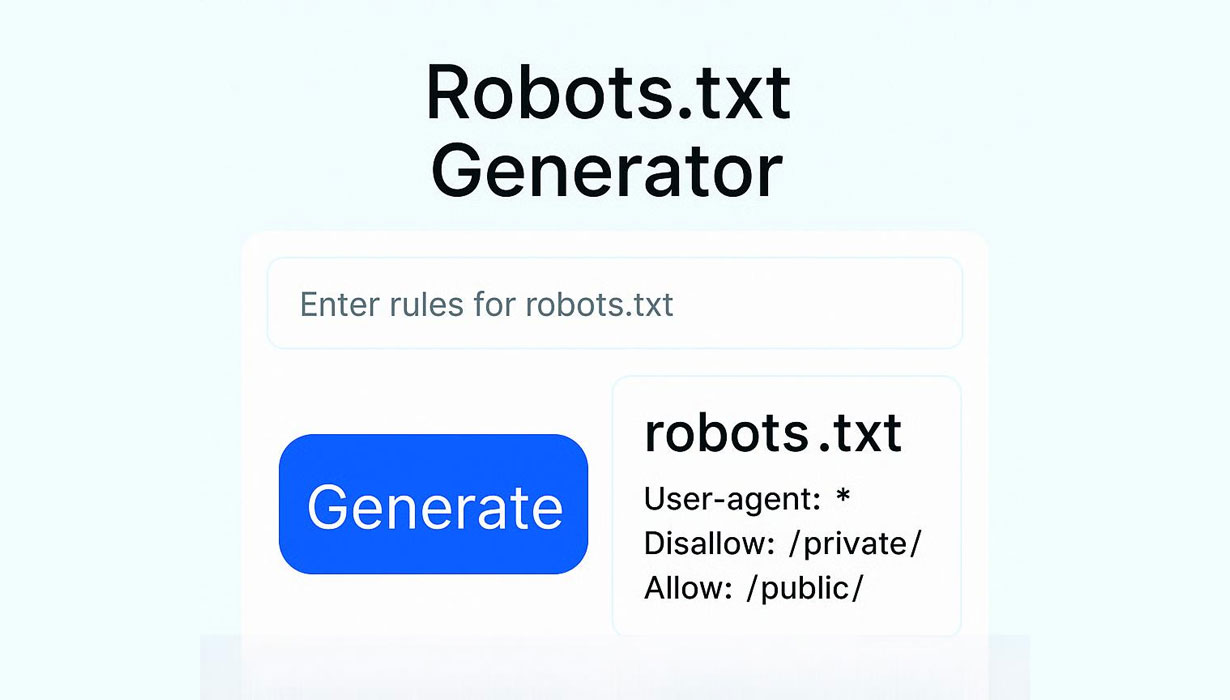

Free Robots.txt Generator & Validator

Take control of how search engines crawl your website with A10ROG's Robots.txt Generator. A correctly configured robots.txt file is important for SEO health: it helps you manage your crawl budget, stop crawlers from wasting time on duplicate content, and keep them away from private sections of your site. Our tool makes it easy to create an error-free file in minutes, even with no technical experience.

How to Use Our Robots.txt Generator

- Configure Access: Use simple checkboxes and dropdowns to Allow or Disallow major search engine bots (like Googlebot).

- Set Directives: Specify folders or pages you want to block (e.g., /admin/, /tmp/).

- Add Sitemap: Input your sitemap URL to help crawlers discover your pages faster.

- Generate & Validate: Click "Generate" to get your perfect robots.txt code. Our built-in validator will check it for syntax errors.

- Download or Copy: Instantly download the .txt file or copy the code to your clipboard.
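The steps above boil down to turning a few settings into plain text. The Python sketch below is not A10ROG's implementation; the RuleGroup class and generate_robots_txt function are hypothetical names, and it assumes each crawler's settings have already been collected into one group.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class RuleGroup:
    """Rules for one crawler (hypothetical structure, not A10ROG's)."""

    user_agent: str = "*"
    disallow: list[str] = field(default_factory=list)
    allow: list[str] = field(default_factory=list)


def generate_robots_txt(groups: list[RuleGroup], sitemap_url: str | None = None) -> str:
    """Render rule groups plus an optional sitemap URL as robots.txt text."""
    lines: list[str] = []
    for group in groups:
        lines.append(f"User-agent: {group.user_agent}")
        # Exceptions (Allow) first, so first-match parsers also honor them.
        lines.extend(f"Allow: {path}" for path in group.allow)
        lines.extend(f"Disallow: {path}" for path in group.disallow)
        if not group.allow and not group.disallow:
            lines.append("Disallow:")  # an empty Disallow blocks nothing
        lines.append("")  # blank line between groups
    if sitemap_url:
        lines.append(f"Sitemap: {sitemap_url}")
    return "\n".join(lines).rstrip("\n") + "\n"


if __name__ == "__main__":
    print(generate_robots_txt(
        [RuleGroup(disallow=["/admin/", "/tmp/"])],
        sitemap_url="https://www.yoursite.com/sitemap.xml",
    ))
```

Allow lines are written before Disallow lines on purpose. Google applies the most specific matching rule regardless of order, but some simpler parsers, including Python's urllib.robotparser, apply the first rule that matches, so listing exceptions first keeps both kinds of parser in agreement for the common case of an allowed file inside a disallowed folder.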

What is a Robots.txt File and Why is it Important?

The robots.txt file implements the Robots Exclusion Protocol (standardized as RFC 9309), which websites use to communicate with web crawlers and other web robots. It tells crawlers which URL paths on the site they should not crawl.

Key Benefits of a Proper Robots.txt File

- Manage Crawl Budget: Guide Googlebot to your most important pages, ensuring they are crawled efficiently.

- Block Private Content: Ask crawlers to stay out of login pages, admin areas, and staging sites.

- Prevent Duplicate Content: Stop search engines from crawling internal search result pages or print-friendly versions.

- Specify Sitemap Location: Point crawlers directly to your XML sitemap for faster indexing.

Common Robots.txt Directives Our Generator Handles

- User-agent: Specify which crawler the rules apply to (e.g., * for all, Googlebot for Google).

- Disallow: Block a crawler from a specific folder or page (e.g., Disallow: /private/).

- Allow: Override a Disallow rule for a specific subdirectory or file.

- Sitemap: Indicate the full URL of your XML sitemap.
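When Allow and Disallow rules overlap, RFC 9309 and Google's documentation both say the most specific rule (the one with the longest path pattern) wins, and Allow wins a tie. The Python sketch below shows that precedence, including the * and $ wildcards. The function names are hypothetical, and it simplifies real crawler behavior: user-agent tokens are matched exactly (falling back to the * group), and URLs are not percent-normalized.

```python
from __future__ import annotations

import re


def _pattern_to_regex(pattern: str) -> re.Pattern[str]:
    """Translate a robots.txt path pattern into a regex anchored at the path start.

    '*' matches any sequence of characters; a trailing '$' marks the end of the URL.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def parse_groups(robots_txt: str) -> dict[str, list[tuple[str, str]]]:
    """Map each lower-cased user-agent token to its (directive, path) rules."""
    groups: dict[str, list[tuple[str, str]]] = {}
    agents: list[str] = []
    in_agent_run = False
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        name, value = (part.strip() for part in line.split(":", 1))
        name = name.lower()
        if name == "user-agent":
            if not in_agent_run:
                agents = []  # consecutive User-agent lines share one group
            agents.append(value.lower())
            groups.setdefault(value.lower(), [])
            in_agent_run = True
        elif name in ("allow", "disallow"):
            in_agent_run = False
            for agent in agents:
                groups[agent].append((name, value))
    return groups


def is_allowed(robots_txt: str, user_agent: str, path: str) -> bool:
    """Longest matching pattern wins; Allow wins ties; no match means allowed."""
    groups = parse_groups(robots_txt)
    rules = groups.get(user_agent.lower(), groups.get("*", []))
    best_length, allowed = -1, True
    for directive, pattern in rules:
        if not pattern:  # an empty Disallow/Allow matches nothing
            continue
        if _pattern_to_regex(pattern).match(path):
            is_allow = directive == "allow"
            if len(pattern) > best_length or (len(pattern) == best_length and is_allow):
                best_length, allowed = len(pattern), is_allow
    return allowed
```

For example, with the WordPress-style rules Disallow: /wp-admin/ and Allow: /wp-admin/admin-ajax.php, is_allowed returns True for /wp-admin/admin-ajax.php because the Allow pattern is longer, while other files under /wp-admin/ stay blocked.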

Why Use the A10ROG Robots.txt Generator?

- Eliminate Syntax Errors: Avoid costly mistakes that can accidentally block your entire site. Our tool generates valid code every time.

- User-Friendly Interface: No need to learn complex syntax. Generate a professional robots.txt file through a simple visual guide.

- Built-in Validation: Our integrated robots.txt validator checks your rules for common errors before you even implement them.

- Best Practices Included: Get pre-configured options for common platforms like WordPress, ensuring you follow SEO best practices from the start.

- 100% Free & Instant: Generate and download your file immediately, no account required.
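A validator of this kind can be approximated with a few line-by-line checks. This is a hypothetical Python sketch, not the A10ROG validator: the validate_robots_txt name, the set of known directives, and the messages are all assumptions, and it flags only a handful of common mistakes, including a site-wide Disallow: / for every crawler.

```python
from __future__ import annotations

from urllib.parse import urlparse

# Crawl-delay is non-standard (Google ignores it) but common, so it is not flagged.
KNOWN_FIELDS = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}


def validate_robots_txt(text: str) -> list[str]:
    """Return a list of problems found in robots.txt text (illustrative checks only)."""
    problems: list[str] = []
    agents: list[str] = []  # user-agents of the group being read
    in_agent_run = False  # True while reading consecutive User-agent lines
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        if ":" not in line:
            problems.append(f"line {number}: missing ':' separator")
            continue
        name, value = (part.strip() for part in line.split(":", 1))
        name = name.lower()
        if name not in KNOWN_FIELDS:
            problems.append(f"line {number}: unknown directive '{name}'")
        elif name == "user-agent":
            if not in_agent_run:
                agents = []  # a new group begins
            agents.append(value)
            in_agent_run = True
        elif name in ("allow", "disallow"):
            in_agent_run = False
            if not agents:
                problems.append(f"line {number}: {name} appears before any User-agent")
            elif value and not value.startswith(("/", "*")):
                problems.append(f"line {number}: path should start with '/'")
            if name == "disallow" and value == "/" and "*" in agents:
                problems.append(f"line {number}: 'Disallow: /' blocks the whole site for all crawlers")
        elif name == "sitemap":
            parsed = urlparse(value)
            if parsed.scheme not in ("http", "https") or not parsed.netloc:
                problems.append(f"line {number}: Sitemap must be an absolute http(s) URL")
    return problems
```

An empty list means none of these particular checks fired; it does not prove the file does what you intend.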

Frequently Asked Questions (FAQ)

Q: Where should I upload the robots.txt file?

A: It must be placed in the root directory of your website (e.g., www.yoursite.com/robots.txt).
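Because crawlers look for the file only at the root of the host, the robots.txt location can be derived from any page URL. A minimal sketch using Python's standard urllib.parse; robots_txt_url is a hypothetical helper name.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL for the scheme, host, and port of page_url."""
    parts = urlsplit(page_url)
    # Keep scheme and netloc (host[:port]); replace path, query, and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))
```

Each scheme, host, and port combination is treated separately, so a subdomain such as shop.yoursite.com needs its own robots.txt file.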

Q: Can robots.txt completely block a page from Google?

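A: No. It is a request, not an enforcement mechanism, and a blocked URL can still appear in search results if other pages link to it. To keep a page out of search results, use the noindex meta tag (and do not block that page in robots.txt, or crawlers will never see the tag) or password-protect the page.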
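The noindex rule is written as <meta name="robots" content="noindex"> in the page's HTML. To check whether a page carries it, a sketch using Python's standard html.parser might look like this; RobotsMetaFinder and has_noindex are hypothetical names, and the sketch ignores crawler-specific tags such as <meta name="googlebot">.

```python
from __future__ import annotations

from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collect directives from <meta name="robots" content="..."> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag: str, attrs: list[tuple[str, str | None]]) -> None:
        # HTMLParser lower-cases tag and attribute names but not attribute values.
        if tag != "meta":
            return
        attributes = {name: value or "" for name, value in attrs}
        if attributes.get("name", "").lower() == "robots":
            for token in attributes.get("content", "").split(","):
                self.directives.add(token.strip().lower())


def has_noindex(html: str) -> bool:
    """Return True if the page carries a robots meta tag containing noindex."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    finder.close()
    return "noindex" in finder.directives
```

Self-closing tags such as <meta ... /> are handled too, because HTMLParser routes them through handle_starttag by default.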

Q: What is the correct format for a robots.txt file?

A: Our generator ensures the correct format. A basic example is:

User-agent: *
Disallow: /private/
Allow: /public/

Sitemap: https://www.yoursite.com/sitemap.xml
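The example can be sanity-checked with Python's standard urllib.robotparser module (site_maps() requires Python 3.8 or later). This parser applies the first matching rule rather than the most specific one, which makes no difference here because the /private/ and /public/ rules do not overlap.

```python
import urllib.robotparser

# The basic example from the FAQ, one directive per line.
EXAMPLE = """\
User-agent: *
Disallow: /private/
Allow: /public/

Sitemap: https://www.yoursite.com/sitemap.xml
"""

parser = urllib.robotparser.RobotFileParser()
parser.parse(EXAMPLE.splitlines())  # parse in-memory lines instead of fetching a URL

print(parser.can_fetch("*", "https://www.yoursite.com/private/report.html"))  # False
print(parser.can_fetch("Googlebot", "https://www.yoursite.com/public/index.html"))  # True
print(parser.can_fetch("Googlebot", "https://www.yoursite.com/about/"))  # True
print(parser.site_maps())  # ['https://www.yoursite.com/sitemap.xml']
```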

Master Technical SEO with A10ROG

Once your robots.txt is set, continue optimizing your site with our suite of free SEO tools. Check out our Meta Tags Generator, XML Sitemap Generator, and HTACCESS Redirect Generator to complete your technical SEO audit.
